I get irrationally annoyed by dictionary ‘word of the year’ announcements. Something that is obviously of no importance makes me shout at the radio – not because of the choices (although Oxford’s ‘youthquake’ in 2017 was discreditable) but because of the concept. It’s PR gimmickry disguised as lexicography.

(Also, the announcements tend to start in mid November, which suggests an unconventional definition of ‘year’.)

But I shouldn’t get too worked up. Dictionaries are good things, and good things cost money to run, and with the shift from print to online you have to grab eyeballs any way you can. This whole word of the year thing seems to work for them. (Here I am, taking the bait…)

Anyway. Cambridge Dictionary’s Word of the First Ten-and-a-Half Months of the Year of 2023 is ‘hallucinate’. Specifically, it’s a new sense of the word. (Update 13/12: Dictionary.com has made the same choice.) The meaning we’re used to is this:

to seem to see, hear, feel, or smell something that does not exist, usually because of a health condition or because you have taken a drug

And the new one that Cambridge has added is this:

When an artificial intelligence (= a computer system that has some of the qualities that the human brain has, such as the ability to produce language in a way that seems human) hallucinates, it produces false information

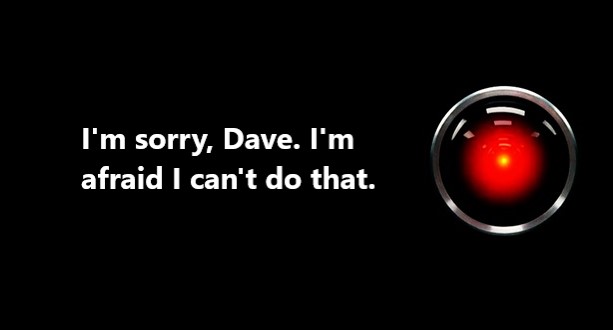

The idea is that ChatGPT and other large language models (LLMs) aren’t really writing explanations of whatever their users are asking them to explain: they’re generating things that look like explanations but might be hopelessly wrong.

This is undeniably topical, although I note that the new definition doesn’t really read like a definition in the way that the first one does. And, if you read down Cambridge’s article announcing the choice, they soon pivot to explaining why a human-compiled dictionary is superior to one generated by LLMs. What was I saying about PR disguised as lexicography…?

Anyway.

I’m not at all sure how widely ‘hallucinate’ is used in this sense at the moment, but it strikes me that there’s already an established word that does the desired job: ‘confabulate’. Cambridge defines this as:

to invent experiences or events that did not really happen

It’s often associated with brain damage or neurological disorders that affect memory, but it can happen in more mundane circumstances too. An example:

Once, about 20 years ago, I spent a weekend with a friend. On Saturday night we had a lot to drink and very little to eat. On Sunday morning we got up and walked a couple of miles to where a friend of his was laying on breakfast for a bunch of people. When we got there the food wasn’t yet ready, and so we stood around for a while. I felt hungover, very tired and extremely hungry. Having sat down on the floor for a rest, I started to stand up again, but the people suddenly around me said ‘No, stay still’ and ‘Sit back down’. I had briefly fainted. On coming to I had no memory of fainting, and so my brain quickly squirted out the first plausible explanation it could grab of why I would be sitting on the floor.

That’s confabulation. And I think it’s a better fit for what LLMs do. (In fact, Cambridge does list the new sense of ‘hallucinate’ as a synonym of ‘confabulate’.)

To hallucinate in the established sense is to falsely sense something that isn’t there. For LLMs to be hallucinating in this way, they’d have to think they were thinking when actually they weren’t. But Descartes might have something to say about that. If LLMs don’t really think, then they can’t think that they think.

The ones doing the hallucinating are us, as we read an LLM-generated text and imagine that it’s the result of intelligence rather than just pattern-recognition algorithms designed to game the Turing test. So ‘simulated intelligence’ might be a better term than ‘artificial intelligence’ (this was the gist of an article I read earlier in the year but now can’t find). (Update: still don’t know which article this was, but I think it must have been an interview with/profile of Geoffrey Hinton, who has been saying for a while that ‘confabulate’ is a better word in this context than ‘hallucinate’.)

Anyway.

I appreciate that ‘confabulate’, however apt it might be in this case, is not a well-known word, and so it may not catch on as well as a less apt twist on ‘hallucinate’. We’ll see.

In conclusion: dictionaries are good things. Use them. The more traffic they get online from genuine uses, the less they might need the gimmicks.